Listening or lip-reading? It’s down to brainwaves

Researchers supported by the Swiss National Science Foundation have discovered that neural oscillations determine whether the brain chooses eyes or ears to interpret speech.

To decipher what a person is telling us, we rely on what we hear as well as on what we see by observing lip movements and facial expressions. Until now, we did not know how the brain chooses between auditory and visual stimuli. But a research group funded by the Swiss National Science Foundation (SNSF) recently showed that neural oscillations (brainwaves) are involved in this process. More precisely, it is the phase of these waves – i.e. the point the wave has reached in its cycle just before a specific instant – that determines which sensory channel will contribute most to the perception of speech. The results of this study, led by neurologist Pierre Mégevand of the University of Geneva, have just been published in the journal Science Advances (*).

Audiovisual illusions

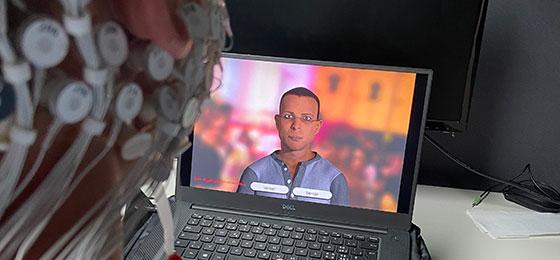

In conducting their study, Pierre Mégevand and his colleagues Raphaël Thézé and Anne-Lise Giraud used an innovative experimental setup based on audiovisual illusions. Subjects were placed in front of a screen on which a virtual character uttered phrases in French that could be misinterpreted, such as “Il n’y a rien à boire / Il n’y a rien à voir” (“There’s nothing to drink / There’s nothing to see” – an example in English would be: “The item was in the vase/base”). In some of the statements spoken by the virtual character, the researchers introduced a conflict between what the subjects saw and what they heard. For example, the character pronounced a “b”, but her lips formed a “v”. The subjects were asked to repeat the statement they had understood while electrodes recorded their brain’s electrical activity.

The researchers observed that when the auditory and visual information matched, the subjects repeated the correct statement most of the time. In the event of a conflict, however, subjects relied on either the auditory cue or the visual cue, depending on the combination presented. For example, when they heard a “v” but saw a “b”, the auditory cue dominated perception in about two-thirds of cases. In the opposite situation, when they heard a “b” but saw a “v”, the visual cue guided perception.

The sensory channel is determined in advance

The researchers compared these results with the brain’s electrical activity. They observed that about 300 milliseconds before the agreement or conflict between the auditory and visual information occurred, the phase of the brainwave in the posterior temporal and occipital cortex differed between subjects who had relied on the visual cue and those who had relied on the auditory cue.

“We have known since the 1970s that in certain situations, the brain seems to choose visual cues over auditory cues, and even more so when the auditory signal is impeded, for example when there is ambient noise. We can now show that brainwaves are involved in this process. However, their exact role is still a mystery,” says Mégevand.

Very accurate results regarding the localisation of brain activity

In this experiment, each statement was uttered in turn by six virtual characters against background noise that hindered auditory comprehension. At the end of each of the 240 statements of the experiment, the subjects had one second to repeat what they had understood.

Twenty-five subjects initially took part in the experiment. However, only 15 recordings of brain activity were usable. For these kinds of studies, that’s a relatively small number. But, says Mégevand, “we were lucky in that a subject who had been fitted with intracranial electrodes because of epilepsy joined the experiment, which allowed us to obtain very accurate results regarding the localisation of brain activity”.

Nonetheless, some results remain to be confirmed. For example, in the study, the link between the wave phase and the perception of speech could only be established in the right cerebral hemisphere. This information is normally received by the left hemisphere.

(*) R. Thézé, A.-L. Giraud, P. Mégevand. The phase of cortical oscillations determines the perceptual fate of visual cues in naturalistic audiovisual speech. Science Advances (2020). https://doi.org/10.1126/sciadv.abc6348

Supporting independent research

This project benefited from the Swiss National Science Foundation’s Ambizione funding scheme. These grants are aimed at young researchers who wish to conduct, manage and lead an independent project at a Swiss higher education institution. The grants are awarded for a maximum of four years.

Links

- Download image for editorial use (JPEG): Listening or lip-reading? Brainwaves are involved in this process. © Raphaël Thézé, University of Geneva
