The ethical and legal boundaries of science

The first legal guidelines for clinical research came about because of the improper use of human experiments. Today, science itself sometimes sets boundaries even before legislators see a need for them. By Ori Schipper

Our prosperity is based to a large degree on our curiosity. It is thanks to this urge to find out new things that life expectancy has doubled in the last hundred years. So does this mean that humanity would be well advised to give free rein to its thirst for knowledge and to set no limits to science?

Numerous abuses make it impossible to answer with a naïve ‘yes’. These abuses have played a role in the gradual development of a complex set of regulations that today erects boundaries first and foremost for clinical research. The earliest ethical guidelines were drawn up in 1900. A few years earlier, the dermatologist Albert Neisser had carried out an experiment in which he had infected prostitutes with the pathogen that causes syphilis – but without their knowledge. The debate that this experiment ignited led to the ‘Prussian Directive on Human Experimentation’. This Directive stipulated for the first time ever that subjects must give their consent when participating in research projects. With this emphasis on the right to self-determination on the part of the participant, the Directive was way ahead of its time. In fact, the paternalist doctor-patient relationship was only breached much later, says Sabrina Engel-Glatter from the Institute for Biomedical Ethics at the University of Basel.

This was probably also why the Directive was unable to prevent inhuman experiments from continuing, both before and during the Second World War. In a letter to Heinrich Himmler, for example, Sigmund Rascher, a member of the SS and a doctor at the Dachau concentration camp, complained that "regrettably, no experiments with human material have been carried out here yet, because the experiments are very dangerous and no one is prepared to volunteer for them". He asked whether Himmler might not be able to place a few professional criminals or camp inmates at his disposal. He wanted them for experiments intended to investigate the chances of survival for pilots after parachute jumps or after landing in the cold waters of the English Channel. Later, Rascher asked to be transferred to Auschwitz because the premises were bigger and his experiments would be easier to carry out. The human guinea pigs "who scream when they freeze" would draw less attention to themselves there. His hypothermia experiments killed at least 80 people.

After the War, the USA put the doctors responsible for the Nazi experiments on trial, and this led in turn to the Nuremberg Code. This ten-point document from 1947 stipulates that a subject’s consent must be given without compulsion or deceit and that it can thereafter be withdrawn at any time. It also demands that experiments be intended to deliver "fruitful results for the good of society". The principles defined in this Code were refined by the World Medical Association and included in the ‘Declaration of Helsinki of 1964’, which states that vulnerable groups such as children, prisoners or the poor are entitled to more specific protection.

Abuse of research interests

However, these deliberations were only enshrined in law after a further scandal: the infamous Tuskegee syphilis experiment that was carried out on several hundred black agricultural labourers with the aim of determining the long-term effects of the disease. The US Health Department began the study in 1932 and only ended it 40 years later, after a whistle-blower went to the media. Public pressure ultimately led to a hurried end to the experiment. In the belief that the quality of the data would increase with the duration of the experiment, those responsible for it gave their test subjects no effective treatment until the very end, even though one had been available since the late 1940s in the form of penicillin.

In reaction to this publicly funded abuse of research interests, the US Congress set up a National Commission for the Protection of Human Subjects. Its task was to define the basic ethical principles with which researchers had to comply when testing on people. In 1979, the Commission set out three principles in its ‘Belmont Report’: respect for persons, beneficence and justice. That same year saw the publication of the ‘Principles of Biomedical Ethics’, which rests on closely related principles and adds non-maleficence as a fourth. This book constituted the first-ever scholarly investigation of the topic and therefore marked the beginning of modern bioethics.

Since then, laws to strengthen patient rights have been introduced all over the world; later, ethics commissions were also instituted. These commissions review a research project before it has even begun, to see whether subjects are adequately protected and whether the study is thus ethically defensible. "In Switzerland there are various ethics commissions today. They can impose conditions and even reject a project", says Engel-Glatter.

Proactive laws

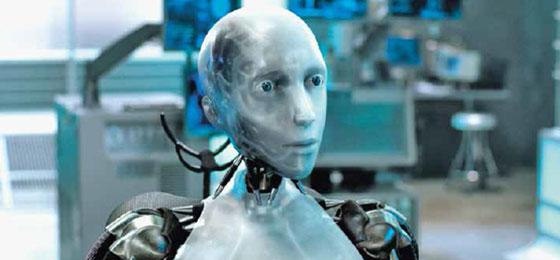

So it seems that clinical research has had to go through a long, painful history to be able to determine what is ethically and legally permissible. But in basic research, the interaction between science and legislation also follows two further patterns. There are proactive laws, such as those that forbid the creation of hybrids between animals and humans or the breeding of human clones. These laws are enacted even before researchers are in a position to carry out such experiments. Then there are cases where scientists set their own boundaries before the legislators even see a need for them. The most famous example is the ‘Conference on Recombinant DNA Molecules’, organised by the US Academy of Sciences, which took place in Asilomar in California in 1975. When researchers first began to alter the genetic make-up of bacteria and viruses in the early 1970s, several of them, such as the later Nobel Prize laureate Paul Berg, realised that they were moving into a sensitive area. They were afraid that genetically altered intestinal bacteria might escape the lab in an accident, infect people and cause cancer as a result. For this reason, the scientists declared a moratorium in 1974.

Berg organised the Asilomar conference along with several colleagues. Its main goal, he wrote a few years ago in the journal Nature, was to establish whether the moratorium might be ended – and if so, under what conditions. Although researchers across the world had kept to the moratorium, there were widely differing opinions at the conference when it came to the anticipated risks. Berg noticed that many scientists regarded their own experiments as less dangerous than those of their colleagues.

The breakthrough only came after days and nights of discussion, when the idea of grading risks arose. In other words, an experiment with a pathogen was to be regarded as fundamentally more dangerous than an experiment with a specific strain of bacteria that could only survive in the lab. With this idea, the professionals assembled in Asilomar laid the foundations for legal norms that would later be adopted all over the world.

Thanks to this cautious approach, science succeeded in gaining public trust; of that Berg is convinced. And when the precautionary principle was first applied, the research community – and the booming biotech industry – cleaved a way forward for itself. ‘Asilomar 1975: DNA modification secured’ is the title of Berg’s reminiscences of the conference. Others, such as the science historian Susan Wright, criticise the fact that the conference gathered together almost exclusively molecular biologists so that they could impose a reductionist approach on the final report – one fixated on technological solutions.

Genetic experiments at school

Berg admits that the conference was indeed restricted to safety in the field of genetic engineering, primarily for reasons of time. Today, however, experiments in genetic engineering don’t just take place in high-security labs, but even in primary schools. It’s ironic then that, in retrospect, the initial fears about safety that were the focus of discussion in Asilomar have since largely dissolved. Instead, it’s the religious and legal viewpoints that were excluded at the time that have been gaining in importance. The current controversies surrounding biotechnology are often about the degree to which living creatures or individual genes may be placed under patent protection, or indeed whether we can justify interfering in creation at all.

Research moratoriums are still being declared today. In a case study, Engel-Glatter looks at experiments that have cultivated bird flu viruses. Two research groups, one from the Netherlands, the other from Japan and the USA, looked into whether bird flu might mutate so that it is not just transmitted by contact with birds, but directly between humans. The researchers created viruses that could be transmitted through the air from one mammal to another – and so, to quote the Dutch head of the research team, "belong among the most dangerous viruses that one could create". When the researchers wanted to publish their results two years ago, they prompted intense discussions as to whether their findings should be kept at least partially secret. The idea was to prevent knowledge about pathogens that could potentially trigger a pandemic from falling into the wrong hands.

The scientists declared a voluntary hiatus in their research. As they wrote in the journals Nature and Science, they wanted to explain the usefulness of their work to the rest of the world – and to give organisations and governments the time to revisit their guidelines. Their research results were eventually published during this year-long interval, complete and unexpurgated. But the debate about the benefits and risks of this kind of research won’t stop for a long time, says Engel-Glatter. "In Europe, it’s only just beginning".

Just a few months ago, the European Society for Virology and the German Ethics Council expressed their support for setting up a biosafety commission. Engel-Glatter believes that research funding organisations must also consider these issues. If the conclusion is reached that the potential benefit of a research project does not justify the risks involved, then it is simpler just to refuse it funding right at the start, rather than trying to keep its findings secret a posteriori.